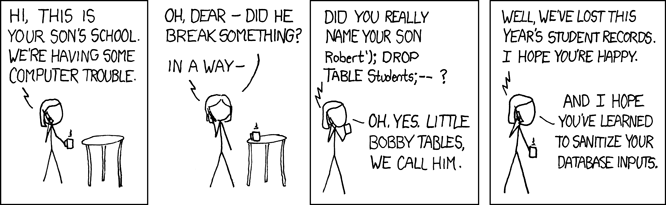

What is a SQL Injection attack?

Two days ago I interviewed a developer who was applying for a junior software developer position.

One question that every interviewer should always ask is about data security.

So, the developer answered the question by referring me to this comic:

The Eight Fallacies of Distributed Computing

Almost everyone, when they first start building a distributed application, makes the following eight assumptions. All prove to be false in the long run, and all cause trouble and painful learning experiences:

- The network is reliable

- Latency is zero

- Bandwidth is infinite

- The network is secure

- Topology doesn’t change

- There is one administrator

- Transport cost is zero

- The network is homogeneous

Keep the points above in mind when you design a distributed application. Building on any of these assumptions increases its maintenance costs.
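The first fallacy, that the network is reliable, is the easiest one to illustrate in code. What follows is only a minimal sketch, assuming a caller that is willing to retry a failed remote call a bounded number of times with a growing delay. The names `Resilient`, `Retry`, `maxAttempts` and `initialDelay` are hypothetical, and the "remote call" is simulated by a lambda that fails twice.

```csharp
using System;
using System.Threading;

public static class Resilient
{
    // Runs 'operation' up to 'maxAttempts' times. After each failure it
    // sleeps, doubling the delay, and lets the last failure propagate.
    public static T Retry<T>(Func<T> operation, int maxAttempts, TimeSpan initialDelay)
    {
        if (maxAttempts < 1) throw new ArgumentOutOfRangeException(nameof(maxAttempts));

        TimeSpan delay = initialDelay;
        for (int attempt = 1; ; attempt++)
        {
            try
            {
                return operation();
            }
            catch (Exception) when (attempt < maxAttempts)
            {
                // Assume the failure is transient and try again later.
                Thread.Sleep(delay);
                delay = TimeSpan.FromTicks(delay.Ticks * 2);
            }
        }
    }
}

public static class RetryDemo
{
    public static void Main()
    {
        int calls = 0;

        // Simulated remote call that fails twice before succeeding.
        string reply = Resilient.Retry(() =>
        {
            calls++;
            if (calls < 3) throw new TimeoutException("simulated network failure");
            return "pong";
        }, maxAttempts: 5, initialDelay: TimeSpan.FromMilliseconds(10));

        Console.WriteLine(reply + " after " + calls + " calls"); // pong after 3 calls
    }
}
```

A real application would retry only the failures it knows to be transient and only operations that are safe to repeat; this sketch retries on any exception to stay short.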

Closures in the Task Parallel Library, what are they? …beware of race conditions.

Up to .NET 3.5, multithreaded programming had its challenges. Multithreading is a way to improve application performance and responsiveness by running long operations on a thread other than the main application thread.

Up to .NET 3.5, spreading work across CPU cores meant creating and coordinating threads by hand. Meanwhile, CPUs (Central Processing Units) could not keep raising the clock speed without overheating the integrated circuits and requiring bigger cooling fans, so the hardware evolved toward placing several CPU cores side by side to increase computing power.

The Task Parallel Library (TPL), introduced in .NET 4.0, responded to the need to catch up with these hardware capabilities by making it easier to execute parallel operations on different cores.

A task created by the TPL does not own a dedicated thread: by default it is queued to the thread pool, which runs it on whichever worker thread becomes available.

When creating a task, a common code snippet to launch it using a lambda expression as an Action is:

```csharp
Task.Factory.StartNew(() =>
{
    statement1;
    statement2;
});
```

However, a newly created task will rarely need no data from the method that starts it.

You are more likely to find the following code inside a C# method that starts a parallel task:

```csharp
// ...
int y = 45;
Task.Factory.StartNew(() =>
{
    y++;
});
// ...
```

y is a local variable that the lambda uses inside the parallel task for further processing.

Now, what would be the value of the variable y when the task finishes running?

Is y passed by value or by reference?

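After the task completes, the value of the primitive y is 46. The lambda does not receive a copy of y: it captures the variable itself, so in effect y is shared by reference between the method and the task. A lambda that captures variables from its enclosing method is called a closure, and those variables are its captured variables.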

Now, from multithreaded programming you might remember that objects shared between several threads could end up with an unpredictable value: the dreaded race condition. There were ways to mitigate it, such as using the lock statement to prevent concurrent threads from changing the state of the object in an unpredictable way. Threads compete for CPU time, and there is no guarantee that they will execute sequentially or in a predictable order.
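As a reminder of what the lock statement looks like with plain threads, here is a minimal sketch. The names `LockDemo`, `CountWithLock` and `Gate` are made up for illustration. Several threads increment one shared counter, and the lock makes each increment happen as a unit.

```csharp
using System;
using System.Threading;

public static class LockDemo
{
    // Private object used only as the lock token.
    private static readonly object Gate = new object();

    // Starts 'threadCount' threads that each add 'incrementsPerThread'
    // to a shared counter, then returns the final value.
    public static int CountWithLock(int threadCount, int incrementsPerThread)
    {
        int counter = 0;
        var threads = new Thread[threadCount];

        for (int i = 0; i < threadCount; i++)
        {
            threads[i] = new Thread(() =>
            {
                for (int j = 0; j < incrementsPerThread; j++)
                {
                    // Only one thread at a time may run this block.
                    lock (Gate)
                    {
                        counter++;
                    }
                }
            });
            threads[i].Start();
        }

        // Wait for every thread to finish before reading the counter.
        foreach (Thread t in threads)
        {
            t.Join();
        }
        return counter;
    }

    public static void Main()
    {
        Console.WriteLine(CountWithLock(4, 100000)); // 400000
    }
}
```

Without the lock, the increments from different threads can interleave and the final count may come out lower than expected.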

You can also cause race conditions with closures in the task parallel library. Let’s see how:

```csharp
// ...
int y = 45;
Task.Factory.StartNew(() =>
{
    y++;
});
// ...
Console.WriteLine(y);
```

In the example above, y won’t always be 46.

Once the task is kicked off, it is queued for processor time, and so is the main thread that runs the method starting the task.

They compete for CPU cycles and run as much in parallel as possible; the execution forks. If the main thread runs first while the parallel task is still waiting in the queue, the value of y displayed on the console will be 45. If the task runs before the main thread reaches the Console.WriteLine statement, the displayed value will be 46.

This is a typical race condition.

You have several ways to mitigate this condition in the TPL. One of them is shown below:

```csharp
int y = 45;
Task t = Task.Factory.StartNew(() =>
{
    y++;
});
// Block until the task has finished before reading y.
Task.WaitAll(t);
Console.WriteLine(y);
```

The example above will always show 46 in the console.
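Another option is to avoid mutating the captured variable at all and let the task return its result. The following is just a sketch, with `ResultDemo` and `IncrementInTask` as hypothetical names. It uses `Task<int>` and its `Result` property, which blocks until the task has completed.

```csharp
using System;
using System.Threading.Tasks;

public static class ResultDemo
{
    // The lambda only reads 'start' and returns a new value,
    // so nothing is shared and mutated between the two threads.
    public static int IncrementInTask(int start)
    {
        Task<int> t = Task.Factory.StartNew(() => start + 1);

        // Result waits for the task to finish, then hands back its value.
        return t.Result;
    }

    public static void Main()
    {
        int y = 45;
        y = IncrementInTask(y);
        Console.WriteLine(y); // 46
    }
}
```

Because the value only changes in the calling method, there is no window in which the main thread can observe a half-finished update.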

Book Collection for a Junior Architect

Design pattern books:

1. C# 3.0 Design Patterns

2. Design Patterns: Elements of Reusable Object-Oriented Software

3. Patterns of Enterprise Application Architecture

4. Microsoft .NET: Architecting Applications for the Enterprise (Pro-Developer)

5. Microsoft Application Architecture Guide (Patterns & Practices) (This one can be downloaded for free as a PDF from MSDN)

6. Enterprise Integration Patterns: Designing, Building, and Deploying Messaging Solutions (This book is really good for specializing in the middle tier, a.k.a. services, queues, etc.)

7. Core J2EE Patterns: Best Practices and Design Strategies (2nd Edition)

Domain Model Pattern (These books are dealing only with the DM pattern)

1. Applying Domain-Driven Design and Patterns: With Examples in C# and .NET

It has examples in C# 2.0 but the concept (DM) is still valid nowadays.

2. Domain-Driven Design: Tackling Complexity in the Heart of Software

This is a classic, although the book is a bit dense; see the examples. The book is narrated as a story from when the author was developing an application for embedded electronic circuits.

Inversion of Control Pattern/IoC:

Internet Architectures (Load balancing, redundancy, ways to scale out)

4. Scalable Internet Architectures

These two are “must have” references, especially the UML one:

6. UML Distilled: A Brief Guide to the Standard Object Modeling Language (3rd Edition)

7. Refactoring: Improving the Design of Existing Code

And to translate from the Object Oriented World, Class Diagrams and UML notation to the Entity Relational World with Entity Relationship Diagrams:

9. Refactoring Databases: Evolutionary Database Design (Addison-Wesley Signature Series (Fowler))

Thanks to Srinivasa Tammana for asking me to put this list together. I own other titles, but they are more specific to a particular database technology or programming language, and the books here are long-time favorites.

Cheers

Lizet

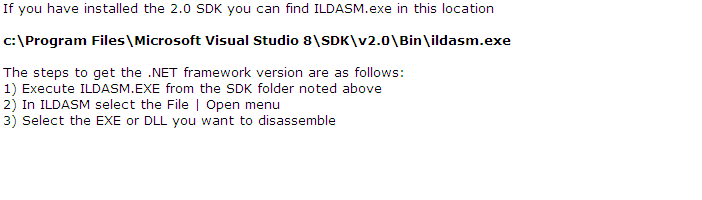

Determining the .NET target version of a dll or .exe assembly

It’s been a while since I blogged. It’s good to be back.

I switched job descriptions and became an application architect, which means I get to decide how solutions are designed… and if the solutions don’t work, the blame falls on me :-p

Now seriously, I was reviewing old posts and realized WordPress didn’t do a good job of keeping my old code snippets from Blogger :(. The formatting is way off and you can hardly see the code properly. Oh well.

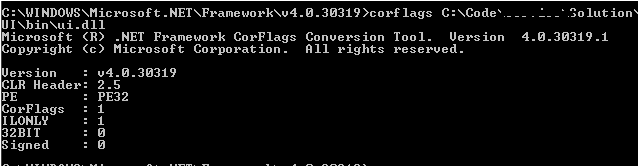

Today we had a deployment issue: one of our projects was compiled to the wrong target framework. When we compared properties and sizes we realized this was not the usual dll size, but how could we determine the actual target framework?

The Windows Explorer properties or the IIS properties are of no use in this case, giving you only the build number.

There are two main options, both utilities distributed with the SDK, to determine the target framework of a dll or exe:

- ILDASM.exe

- CorFlags.exe

and if you want to use CorFlags.exe:
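A third route, separate from the two SDK utilities, is to ask the assembly itself through reflection. This is only a sketch under a few assumptions: `TargetInspector` and `DescribeTarget` are hypothetical names, the assembly can actually be loaded into the current process, and the `TargetFrameworkAttribute` is present only when the compiler emitted it (typically for assemblies built for .NET 4.0 or later).

```csharp
using System;
using System.Reflection;
using System.Runtime.Versioning;

public static class TargetInspector
{
    public static string DescribeTarget(Assembly assembly)
    {
        // CLR version stored in the assembly metadata,
        // for example "v2.0.50727" or "v4.0.30319".
        string runtime = assembly.ImageRuntimeVersion;

        // Optional attribute naming the framework the project targeted.
        object[] attrs = assembly.GetCustomAttributes(typeof(TargetFrameworkAttribute), false);
        string framework = attrs.Length > 0
            ? ((TargetFrameworkAttribute)attrs[0]).FrameworkName
            : "(not recorded)";

        return "Runtime: " + runtime + ", target framework: " + framework;
    }

    public static void Main(string[] args)
    {
        // Inspect the file given on the command line, or this program itself.
        Assembly assembly = args.Length > 0
            ? Assembly.LoadFrom(args[0])
            : typeof(TargetInspector).Assembly;

        Console.WriteLine(DescribeTarget(assembly));
    }
}
```

Note that `ImageRuntimeVersion` reports the CLR version, so assemblies built for .NET 2.0, 3.0 and 3.5 all show the same value. Loading an assembly also has side effects, so the SDK utilities remain the safer choice on a production server.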

Happy coding 🙂

L.

Today is Remembrance Day in Canada

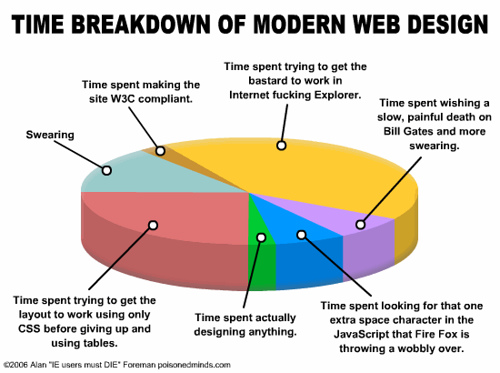

How time is spent on web development

Dear reader,

Ok, the image above is pretty… and pretty hilarious as well. I must say that some of these timelines are true, though. No, I don’t hate IE 100%; I still think of it as the first browser that introduced XMLHttpRequest, as the XMLHTTP ActiveX object in IE5, during the Netscape vs IE war. Somehow IE prevailed and also enabled the Ajaxian websites we see today. If you remember how bad it was to make a site work in Netscape, you’ll understand why I’m glad it died in 2002 :-p

That being said, the timelines are somewhat accurate. Unfortunately, web development with HTML rendered in a browser has many places where things can go wrong, and prototyping takes only a small share of the time, even if the prototype looks real and very cool (and I’m referring to either a pure HTML prototype or a set of images created with a prototyping tool).

If you inherit an existing CSS and a certain master page or left navigation, things can get complicated if you don’t keep your styles pruned, and if you use pure CSS, introducing new elements into an existing page can be a nightmare because the existing elements can shift easily.

Add to that the fact that FF and IE use different box models and different ways of handling positioning (margin and padding), and cross-browser compatibility can get messy.

JavaScript also requires extra effort: strings with unescaped quotes, single quotes for example, can break any JavaScript they are injected into when the DOM is created in the browser.

In the past few years, the emergence of JavaScript libraries such as jQuery, Dojo and GWT has made developers’ lives easier.

I wonder if in a few years the world will move away from HTML/JavaScript toward web sites developed by alternate means, e.g. Silverlight/Flex… what would the pie chart above look like then?

Cheers!

PS. The subject of this blog post might not necessarily align with my employer’s or coworkers’ opinions, and it is given only as a comment. Oh, and I never swear…

Estimation, that part of a project every developer dreads…

I was reading the IASA forum and found this article that summarizes a few truths about project estimation. I’ve been haunted more than once by time estimates I’ve given, and I tend to be very conservative, to the point of having my estimates always frowned upon.

Here are some tenets:

1. It is relatively easy to estimate what you know.

2. It is difficult to estimate what you know you don’t know.

3. It is very difficult to estimate what you don’t know you don’t know.

And here is a thought that makes more sense to me than anything else I have tried (waterfall, mandates, wishful thinking from management, etc.) in my many years as a developer:

Agile is not an estimation methodology in and of itself, and it does not generally develop estimates in units of time. Instead, estimated units of work are used. The units of work do not directly map to time until you have calculated your team velocity. Once the velocity is determined, it can give you a pretty good idea as to how long it will take to complete your project – that is for the body of work in the project that you know of and understand.
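To make the arithmetic concrete, here is a small sketch with made-up numbers: 120 remaining units of work, a measured velocity of 25 units per sprint, and two-week sprints. `VelocityEstimate` and `SprintsRemaining` are hypothetical names, and rounding up to whole sprints is my own assumption.

```csharp
using System;

public static class VelocityEstimate
{
    // Sprints needed = remaining work / velocity, rounded up,
    // because a partly used sprint still takes a whole sprint.
    public static int SprintsRemaining(int remainingPoints, int velocityPerSprint)
    {
        if (remainingPoints < 0) throw new ArgumentOutOfRangeException(nameof(remainingPoints));
        if (velocityPerSprint <= 0) throw new ArgumentOutOfRangeException(nameof(velocityPerSprint));

        return (remainingPoints + velocityPerSprint - 1) / velocityPerSprint;
    }

    public static void Main()
    {
        // Hypothetical figures for illustration only.
        int sprints = SprintsRemaining(120, 25);
        int weeks = sprints * 2; // assuming two-week sprints

        Console.WriteLine(sprints + " sprints, about " + weeks + " weeks"); // 5 sprints, about 10 weeks
    }
}
```

The estimate covers only the work that is already known and sized, which is exactly the caveat the quote ends on.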

And here is the link to the complete article, it is worth reading every line with attention :)…

Why I find iPad a little bit dull…

1. No penmanship recognition

2. No stylus. It must be hard to go on an inspection or to a patient’s house and type your notes with a single hand while you hold the iPad; handwriting recognition has been available on tablets for a while.

3. No Flash support.

4. Hard to program on.

5. Censorship by Apple of the apps you are able to run.