As part of my job I help developers take a closer look at their source code and analyze it under the “microscope”. Part of this analysis is profiling the components of a solution for CPU, network, I/O and memory usage, trying to pinpoint the areas of the code that consume the most resources and to see whether they can be optimized. This is what is known as profiling an application or a solution.

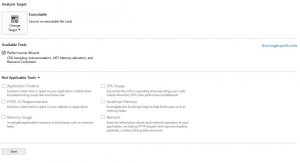

Visual Studio 2017, Community, Professional and Enterprise editions, all offer profiling and performance analysis tools. They cover a variety of languages, and types of targets to be profiled. The image below shows the different profiling targets that can be analyzed with the Performance Profiler.

In the world of DevOps, part of the build automation is written in scripting languages, and one of them is PowerShell. After one of the training sessions on performance analysis and profiling with VS 2017, this question was posed:

How can we analyze the performance of PowerShell scripts to determine the areas of the code that consume the most CPU and take the most time to complete?

The main aid that the VS 2017 perf tools offer is the ability to show the source code that uses the most CPU (identifying these sections of the code as “Hot Paths”) and the areas of the code that place the most objects on the heap without those objects being reclaimed in any of the three garbage collection generations (potential memory leaks). The VS 2017 profiling and diagnostic tools can also analyze multi-threaded applications and applications that use parallel tasks. But how about PowerShell code? How can similar profiling be done on PowerShell source code to look at CPU and memory utilization?

Visual Studio 2017 does not offer a specific profiling wizard or GUI for PowerShell that correlates the OS CPU and memory performance counters with the PowerShell script code.

That said, you can still profile PowerShell code; it is just not as easy.

Using PowerShell you can still access the CPU counters and Memory counters available in the operating system.

This can be done with the classes in the System.Diagnostics namespace or, in PowerShell versions 3.0 to 6.0, with the cmdlets in the Microsoft.PowerShell.Diagnostics module.
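As a rough illustration of the System.Diagnostics route, the sketch below samples the CPU time and working set of the current PowerShell process before and after a piece of work. The workload loop is a hypothetical stand-in for the script section under test. The guarded `Get-Counter` call at the end assumes a Windows host with English counter names; counter paths are localized, so it may need adjusting elsewhere.

```powershell
# Sample CPU time and memory of the current PowerShell process around a workload.
$proc = [System.Diagnostics.Process]::GetCurrentProcess()

$cpuBefore = $proc.TotalProcessorTime
$memBefore = $proc.WorkingSet64

# Hypothetical workload standing in for the script section being profiled.
$sum = 0.0
foreach ($i in 1..200000) { $sum += [math]::Sqrt($i) }

$proc.Refresh()   # discard cached values so the next reads are fresh
$cpuUsedMs  = ($proc.TotalProcessorTime - $cpuBefore).TotalMilliseconds
$memDeltaMB = ($proc.WorkingSet64 - $memBefore) / 1MB

[pscustomobject]@{
    CpuMilliseconds   = [math]::Round($cpuUsedMs, 1)
    WorkingSetDeltaMB = [math]::Round($memDeltaMB, 2)
}

# Optional: read an OS-level counter where the Get-Counter cmdlet is available.
# Assumption: English counter path; skipped silently if the cmdlet is missing.
if (Get-Command Get-Counter -ErrorAction SilentlyContinue) {
    Get-Counter -Counter '\Processor(_Total)\% Processor Time' -SampleInterval 1 -MaxSamples 2 -ErrorAction SilentlyContinue |
        ForEach-Object { $_.CounterSamples | Select-Object Path, CookedValue }
}
```

The working set delta can be negative if the OS trims the process while the workload runs, so treat it as a coarse signal rather than an exact allocation count.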

You can also use the Windows Management Instrumentation (WMI) cmdlets, but the recommended way to profile a process on remote hosts is to use the newer WinRM and WS-Management (WSMan) protocols and their associated cmdlets.
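A minimal sketch of that approach, assuming a Windows host where the CIM cmdlets are available: it reads the CPU time and working set of the current process from the `Win32_Process` class. The host name in the commented remote variant is hypothetical.

```powershell
# Query CPU time and memory for the current process through CIM.
# The guard keeps the sketch from failing where the CIM cmdlets are unavailable.
if (Get-Command Get-CimInstance -ErrorAction SilentlyContinue) {
    $p = Get-CimInstance -ClassName Win32_Process -Filter "ProcessId = $PID"

    [pscustomobject]@{
        Name         = $p.Name
        # KernelModeTime and UserModeTime are reported in 100-nanosecond units.
        CpuSeconds   = [math]::Round(($p.KernelModeTime + $p.UserModeTime) / 1e7, 2)
        WorkingSetMB = [math]::Round($p.WorkingSetSize / 1MB, 1)
    }

    # Remote variant (hypothetical host name); with -ComputerName the cmdlet
    # connects over WSMan by default:
    # Get-CimInstance -ClassName Win32_Process -ComputerName 'build01'
}
```

Running the query before and after a script section and subtracting the two `CpuSeconds` values gives an approximate CPU cost for that section.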

These were the only references I’ve seen on the web regarding CPU and Memory analysis of OS processes using PowerShell:

https://stackoverflow.com/questions/25814233/powershell-memory-and-cpu-usage

Now, to use WMI against a remote host, the WMI Windows service needs to be up and running on that host and reachable over RPC, which starts at the endpoint mapper on TCP port 135. WMI is an older technology built on top of DCOM, and some hosts have this Windows service stopped as part of host hardening.

WinRM is a service based on SOAP messages. It is a newer protocol for remote management, and by default it listens for HTTP connections on TCP port 5985. If the connection uses transport layer security with digital certificates, the default HTTPS port is 5986.

WMI, WinRM and WSMan only work on Windows Servers and Windows Client Operating Systems.
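The protocol choice described above can be made explicit when opening a CIM session. The helper below is a hypothetical sketch, not an established function: it assumes a Windows machine with the CIM cmdlets and a reachable remote host, and it only wraps `New-CimSessionOption` and `New-CimSession`.

```powershell
# Hypothetical helper: open a CIM session over WSMan (WinRM) or DCOM (classic WMI).
function New-ProfilingCimSession {
    param(
        [Parameter(Mandatory = $true)]
        [string] $ComputerName,

        [ValidateSet('Wsman', 'Dcom')]
        [string] $Protocol = 'Wsman'
    )

    # Wsman goes through the WinRM listener; Dcom falls back to RPC/DCOM.
    $option = New-CimSessionOption -Protocol $Protocol
    New-CimSession -ComputerName $ComputerName -SessionOption $option
}

# Example usage (hypothetical host name, left commented out on purpose):
# $session = New-ProfilingCimSession -ComputerName 'build01' -Protocol Dcom
# Get-CimInstance -CimSession $session -ClassName Win32_Process
# Remove-CimSession $session
```

Defaulting to `Wsman` matches the recommendation above, while `Dcom` remains available for older hosts where WinRM is not configured.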

One needs to inject profiling-like cmdlets directly into the PowerShell code to find the hot spots that cause high CPU utilization. This can work, but then one needs to remember to either comment out or delete the instrumentation before the PowerShell code runs in the production environment.
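One way to sketch this kind of direct instrumentation is a small timing wrapper with an on/off switch, so the calls can stay in place and be disabled rather than deleted. `Measure-Section`, `$ProfilingEnabled` and `$Timings` are hypothetical names introduced for this example, and the two timed sections are placeholder workloads. The built-in `Measure-Command` would also work, but it discards the output of the script block, which is why a stopwatch is used here.

```powershell
# Hypothetical switch and result store for the instrumentation.
$script:ProfilingEnabled = $true
$script:Timings = New-Object 'System.Collections.Generic.List[object]'

# Hypothetical helper: run a script block, pass its output through,
# and record how long it took under the given section name.
function Measure-Section {
    param(
        [Parameter(Mandatory = $true)] [string] $Name,
        [Parameter(Mandatory = $true)] [scriptblock] $Body
    )

    if (-not $script:ProfilingEnabled) { return & $Body }

    $sw = [System.Diagnostics.Stopwatch]::StartNew()
    try {
        & $Body
    }
    finally {
        $sw.Stop()
        $script:Timings.Add([pscustomobject]@{
            Section      = $Name
            Milliseconds = $sw.Elapsed.TotalMilliseconds
        })
    }
}

# Placeholder workloads standing in for real sections of an automation script.
$files = Measure-Section -Name 'BuildList' -Body { 1..50000 | ForEach-Object { "file$_.txt" } }
$count = Measure-Section -Name 'Filter' -Body { ($files | Where-Object { $_ -like '*7.txt' }).Count }

# Slowest sections first.
$script:Timings | Sort-Object Milliseconds -Descending | Format-Table -AutoSize
```

Setting `$script:ProfilingEnabled = $false` before a production run leaves the script's behavior unchanged while skipping the timing bookkeeping.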

If you have profiled your PowerShell automation scripts some other way, we’d love to hear your experience.

Happy coding DevOps!

I saw your list of PowerShell profiling resources and I have one more to add to your list. Chronometer: PowerShell line by line execution times

Chronometer analyzes a script or module during execution and reports line by line execution times. It allows you to see your code coverage and where most of your execution time is spent.

GitHub Repository

Wow! @Kevin Marquette, thank you for pointing me to that GitHub repo. This blog post actually came about after a technical talk about code performance analysis. The talk was mostly on managed languages, garbage collection, the Large Object Heap, etc. But the attendees had a real need with their deployment automation scripts: to pinpoint the parts of a script that take too long to complete or consume too many CPU cycles. Some of their automation ran for hours. This project fits perfectly with what they were looking for! I’ll try to pass the message along.

A performance measurement script that captures processor time and memory utilization for PowerShell:

https://communary.net/2014/10/28/measure-scriptblock/